By Chris Kremidas-Courtney, Senior Fellow for Peace, Security & Defence at Friends of Europe and Lecturer at the Institute for Security Governance (ISG) in Monterey, California

2024 will be a pivotal election year for the democratic world, with EU parliamentary elections; US presidential and congressional elections; national elections in Belgium, Croatia, Finland, Georgia, India, Lithuania, Mexico, Moldova, North Macedonia, Romania, Slovakia, South Korea and Taiwan; and regional elections in Austria, Australia, Canada, Germany, Spain and the United Kingdom. Each of them could take place under a cloud of AI-driven disinformation.

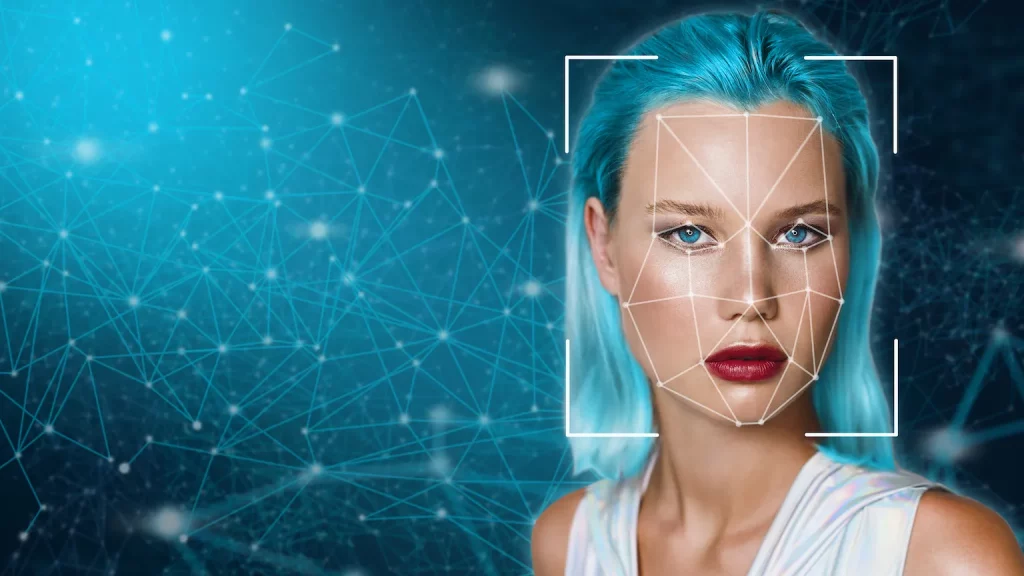

While Europe and the rest of the West have learned much about how to debunk and counter disinformation, new technologies are arriving on the scene that are radically changing the information landscape. Among them are extended reality (XR), AI and neurotechnology. The one projected to have the greatest impact on the 2024 election cycle is AI.

Because disinformation actors start priming audiences as much as two years before an election, AI is already being used in the US Republican presidential primary race: the party has aired AI-produced adverts depicting fake disasters that it claims would happen if US President Joe Biden is re-elected. Meanwhile, Republican primary candidates Donald Trump and Florida Governor Ron DeSantis are already using deep fakes against each other online.

In Europe, companies such as Deutsche Telekom, Maybelline and Orange (working with the Fédération Française de Football) have created deep fake video adverts, both for public safety announcements and to sell products.

While these kinds of AI-generated adverts are concerning, the greater risk is that large language models (LLMs) such as GPT-4 can be used to generate thousands of messages to flood social media sites, creating false constituencies that not only mislead audiences but also confuse and frustrate the general public, leaving people unable to tell truth from falsehood.

AI also makes disinformation campaigns more effective across languages, since they are no longer limited by the language skills of a human troll farm. In 2016, the average social media user could often tell from clumsy use of a language that an account was likely run by a foreign-based troll. Today, AI-driven accounts used by malign actors can write articles and post comments in numerous languages with native fluency.

Another AI advance that could feed a new era of disinformation is conversational AI (CAI), a technology that enables real-time engagement between an AI system and a person. Through this engagement, the AI system can effectively target and influence a person with a message tailored just for them. It then senses their reactions and adjusts its approach to maximise impact over time.

As a result, these systems not only adapt to a person’s immediate verbal and emotional responses but also become more adept at ‘playing’ the person over time. CAI systems can draw people into conversation, guide them to accept new ideas and ultimately influence them to believe in disinformation. When used at scale, CAI could be the perfect manipulation tool.

Meanwhile, the ability to make convincing deep fake videos continues to advance rapidly, as shown by MagicEdit, recently developed by ByteDance, the parent company of TikTok. MagicEdit uses openly available AI to enable rapid, high-quality video editing, converting an existing video into a convincing deep fake.

Numerous reports by EU and US authorities have warned that Russia, China, Iran and others are stepping up their efforts to influence elections in democratic countries, but state actors are not the only ones with access to AI tools to create and spread disinformation.

AI now enables information manipulation and disinformation to be produced at mass scale and at low cost. Recently, developers in the United States conducted an experiment to create a sophisticated ‘disinformation machine’ using openly available generative AI. This test bot scanned Twitter (X) for specific words and topics, then responded with a counternarrative by “creating fake stories, fake historical events, and creating doubt in the accuracy of the original article.”

It also generated backup content with fake profiles of writers, photographs and AI-generated comments on the articles themselves to make them look more authentic. The total cost for this capability? Only $400.


These lower costs of producing potent disinformation at scale open the door for even more actors to get involved. Combined with microtargeting data available on the dark web or the open market, this capability could give non-state and individual actors information manipulation capabilities on par with those of some governments.

Just as the democratisation of technology is making sophisticated drones and advanced weapons available to private citizens, it is also putting sophisticated AI tools into the hands of malign and mischievous state, non-state and individual actors, who can use them to mass-produce and distribute convincing disinformation.

All of these factors point to an even greater threat to democracy during the 2024 election year since the potential for our information ecosystem to be flooded with ‘high-quality’ disinformation is skyrocketing.

And despite our best efforts at detection and debunking, disinformation produced at scale with these new AI capabilities will be created faster than authorities, media companies and civil society groups can fact-check and debunk it.

All of this is happening at a time when big tech companies like Facebook, YouTube and Twitter (X) seem to be surrendering to disinformation by cutting content moderation staff and loosening standards.

As some have been warning over the past few years, AI is developing faster than regulation can keep up. In many ways, we are repeating the mistakes of the recent past, when governments allowed technology to develop without much regulation for fear of stifling innovation. We then watched for months and years as new technology weakened democracy, divided society and eroded privacy. Only after much damage had been done did we summon the will to enact regulations like the EU’s AI Act and Digital Services Act, both of which are themselves quickly being outpaced. We can’t make this mistake again.

Clearly, while media literacy and education are invaluable, they are not enough to help citizens navigate an information ecosystem in which machines will soon outnumber humans on the internet: a world in which there may no longer be a single, objectively verifiable version of facts and reality.

We need effective guardrails and we need them fast. Fighting AI-driven disinformation using AI is a possible solution, but according to some experts, doing so is neither cheap nor very effective. One solution proposed by several top experts is for tech companies to use watermarking to identify AI-produced or AI-altered videos, photos or text.

According to Paris-based technology expert Naomi Roth: “Invisible watermarking involves embedding information within the content, in the metadata, that one or an algorithm can consult to identify its source. For example, companies like Nikon and Leica already include features that capture metadata, such as location, when you take a photograph. This ensures that the photograph was taken at a location and time one claims to have taken it. However, implementing invisible watermarking write and read capacities across all devices and information consumption systems would be a significant undertaking.”


To be better prepared for 2024, Roth argues, “It may be prudent to prioritize developing watermark and labeling systems for audio-visual content first in the event of coming elections. It is, however, important to note that there is no guarantee that watermarks will be positively perceived or trusted by the public. They could even erode trust in genuine and fair human-generated content that does not adhere to specific protocols because their authors lacked the means or needed to protect themselves against an authoritarian regime.”

Recently, Google launched a watermarking tool called SynthID for AI-generated images. According to AI pioneer Louis Rosenberg, “It sounds like a great thing except it only works for their image generation tool [Imagen] and worse, it’s optional for users. Watermarking has no point unless it’s enforced and widespread. And even then, there is a reasonable chance it gets defeated by increasingly powerful countermeasures.”

Even these solutions are not airtight: the code for some AI models without watermarking is already openly available, so malign actors could still use them.

An additional solution is for tech companies, governments and civil society groups to step up their efforts and upgrade their capabilities for content moderation and debunking. For the private sector, this means reversing the trend of cutting moderation staff and loosening standards.

Governments can and should provide incentives for media and tech companies to moderate content and prevent the information ecosystem from being flooded with AI-generated disinformation. They should also enact stiff penalties for failing to do so. It will take both carrots and sticks to prevent the further erosion of democracy and social discourse, especially in an era of cheap access to the ability to generate disinformation using AI.

In the end, safeguarding citizens and societies from advanced forms of cognitive manipulation must also be seen as a human rights issue. So it’s time to update our idea of human rights to include the right to cognitive self-determination: the right to make our own decisions without extensive manipulation, and to exercise free will in an environment where we can discern truth and reality from lies and fabrications.